Pentagon AI Integration and Anthropic: Ethics, Strategy, and the Future of Defence Technology Partnerships

By Aryamehr Fattahi | 20 February 2026

Summary

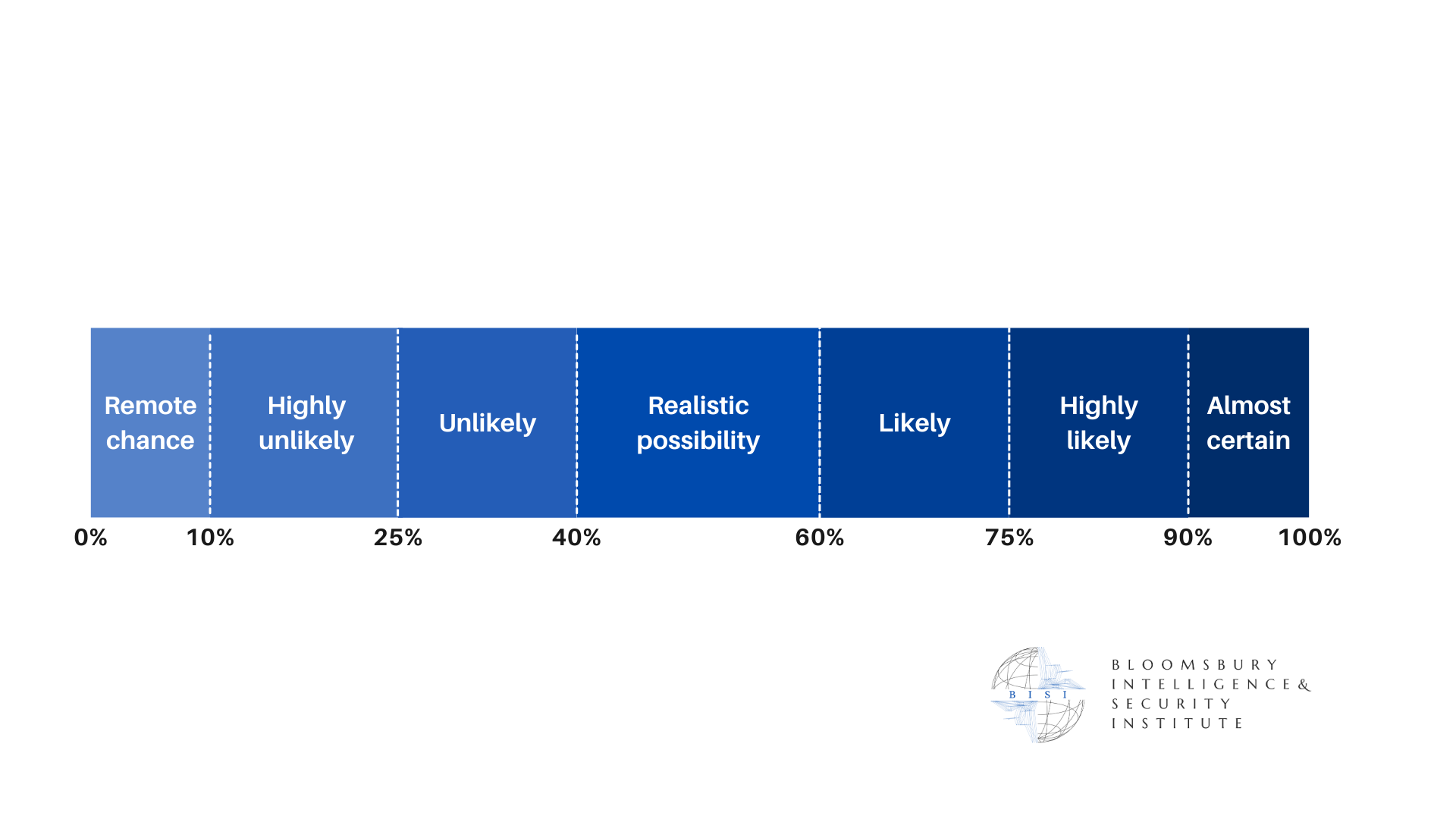

The United States (US) Department of War (DoW) issued its Artificial Intelligence (AI) Acceleration Strategy in January 2026, mandating an "AI-first warfighting force" and requiring all contracted AI models to be available for "all lawful purposes." Anthropic, whose Claude model is the only frontier AI currently deployed on classified military networks, refuses to permit the use of its models for mass domestic surveillance or fully autonomous weapons.

The dispute is almost certain to set a precedent for the terms under which Silicon Valley provides AI to national security customers. A "supply chain risk" designation against Anthropic would cascade across the broader technology sector. The standoff is accelerating competitor positioning by OpenAI, Google, and xAI, each of which has signalled greater willingness to accommodate DoW demands.

A negotiated compromise remains a realistic possibility in the near term, though political dynamics favour escalation. The DoW is highly likely to diversify its classified AI infrastructure away from sole dependence on Anthropic over the coming year.

Context

The AI Acceleration Strategy, released on 9 January 2026, was the DoW's 3rd such strategy in 4 years and its most aggressive. The 6-page memo established 7 "Pace-Setting Projects" spanning autonomous swarms, AI-enabled battle management, and department-wide generative AI deployment, with initial demonstrations due by July 2026. It mandated that contracted AI models be deployable within 30 days of public release and usable for "all lawful purposes."

It was confirmed that this standard applies equally to all 4 frontier AI companies awarded contracts of up to USD 200m each in July 2025: Anthropic, OpenAI, Google, and xAI. However, Claude is the only frontier AI model operating on classified Pentagon networks, deployed via Palantir's AI Platform. Anthropic maintains 2 non-negotiable conditions: no mass domestic surveillance of Americans and no fully autonomous weapons without meaningful human oversight. On 15 February 2026, Pentagon officials described these categories as containing "considerable grey area" and called case-by-case negotiation "unworkable."

The confrontation escalated rapidly. Axios reported on 16 February 2026 that the Pentagon was "close" to severing ties and designating Anthropic a "supply chain risk," a classification normally reserved for foreign adversaries. Of the 4 contracted companies, xAI has reportedly agreed to "all lawful use" at any classification level, while OpenAI and Google have shown flexibility on unclassified work but continue negotiating classified access terms.

The dispute gained additional urgency after the Wall Street Journal reported that Claude was used, via Palantir's classified platform, during the January military operation to capture Venezuelan President Nicolás Maduro, though the extent of its role remains disputed. Internationally, the UN Convention on Certain Conventional Weapons is scheduled to hold its 7th Review Conference in November 2026, the putative deadline for progress on a lethal autonomous weapons instrument supported by over 120 states.

Implications and Analysis

The Pentagon-Anthropic standoff extends far beyond a single contract dispute. Its resolution will shape the terms of engagement between Silicon Valley and national security institutions for years to come, with cascading effects across multiple domains.

The strategic paradox of the supply chain risk threat

The most consequential element is not the potential loss of a USD 200m contract but the "supply chain risk" designation. This would require every DoW contractor to certify that it does not use Claude, forcing compliance decisions across the defence industrial base and into finance, healthcare, and enterprise technology.

This creates a strategic paradox. The DoW acknowledges that replacing Claude on classified networks would be disruptive because competing models are not yet certified for that environment. Classified system certification requires air-gapped security engineering, authority-to-operate approvals, and integration work spanning 6 to 18 months at a minimum. The designation would therefore damage the DoW's own operational capability in the short term. Its primary value is almost certainly as leverage, though political pressure to demonstrate resolve introduces the realistic possibility of miscalculation.

The shifted ethics landscape since Project Maven

The dispute has exposed a divergence among frontier AI companies that would have been unthinkable in 2018, when over 4,000 Google employees signed petitions against Project Maven and the company declined to renew the contract. Google reversed its post-Maven weapons and surveillance prohibitions in February 2025. OpenAI removed its explicit ban on military applications in January 2024. xAI is now the only frontier lab competing in the DoW's autonomous drone swarm contest.

This repositioning carries its own risks. Companies accepting expansive military terms are likely to face internal backlash, though large-scale revolts appear unlikely given the capital intensity of frontier AI development and the reduced leverage of individual employees. Conversely, firms maintaining strict ethical red lines test whether differentiation on governance can remain commercially viable in a defence-driven environment. The outcome will likely influence hiring dynamics, retention, and investor sentiment across the sector, as well as shape how defence contractors structure partnerships to accommodate differing provider constraints.

Pentagon officials have acknowledged that the confrontation with Anthropic serves as a useful mechanism for setting the tone in parallel negotiations with all 3 other companies. The "all lawful purposes" standard, once codified, is likely to become the default expectation for all future defence AI procurement. The competitive advantage is likely to accrue to firms aligning with this doctrine, creating a 2-tier market separating companies inside the defence AI ecosystem from those outside it.

Geopolitical ramifications

For China, the dispute offers both intelligence value and strategic advantage. Beijing's Military-Civil Fusion strategy faces no equivalent corporate resistance, providing a structural edge in the speed of military AI deployment even where the underlying technology trails. The People's Liberation Army's "intelligentisation" programme targets 2027 for integrated AI-enabled warfare. Any visible fracture between America's leading AI companies and its defence establishment undermines the narrative of a unified US technology base.

For allied nations building defence AI architectures around US models, an "all lawful purposes" baseline is likely to conflict with stricter European and Commonwealth regulatory frameworks, complicating interoperability and procurement decisions. This is particularly relevant to the North Atlantic Treaty Organisation (NATO) and other alliances, where member states are likely to have different requirements and capacities for responsible AI.

The Pentagon's push for fewer restrictions on military AI makes meaningful US engagement with international regulation of lethal autonomous weapons systems (LAWS) a remote chance, further widening the gap between diplomatic ambition and operational reality. This is likely to embolden adversaries and complicate relationships with allies who favour multilateral governance frameworks.

Precedent for AI governance globally

The outcome will almost certainly establish lasting precedent for AI company-government relationships worldwide. If Anthropic capitulates, it signals that ethical red lines are negotiable under sufficient pressure. If Anthropic prevails or maintains its position while competitors gain market share, it tests whether differentiation on ethics is commercially viable. Either outcome reshapes the calculus for every AI company considering defence work.

Forecast

Short-term (Now - 3 months)

A negotiated accommodation on specific use-case definitions is a realistic possibility, though Anthropic is unlikely to abandon its 2 core red lines. The DoW is likely to accelerate the onboarding of competing models to unclassified platforms.

Medium-term (3 - 12 months)

The DoW achieving multi-model classified capability by late 2026 is likely, reducing Anthropic's leverage. Internal pressure on engineers at competing labs is a realistic possibility, though large-scale revolts akin to 2018 are unlikely.

Long-term (>1 year)

This dispute is almost certain to establish lasting precedent for AI company-government relationships globally. A formal international instrument restricting lethal autonomous weapons before 2028 remains highly unlikely.