Australia's Social Media Ban: A Test for Global Digital Governance

By Aryamehr Fattahi | 15 December 2025

Summary

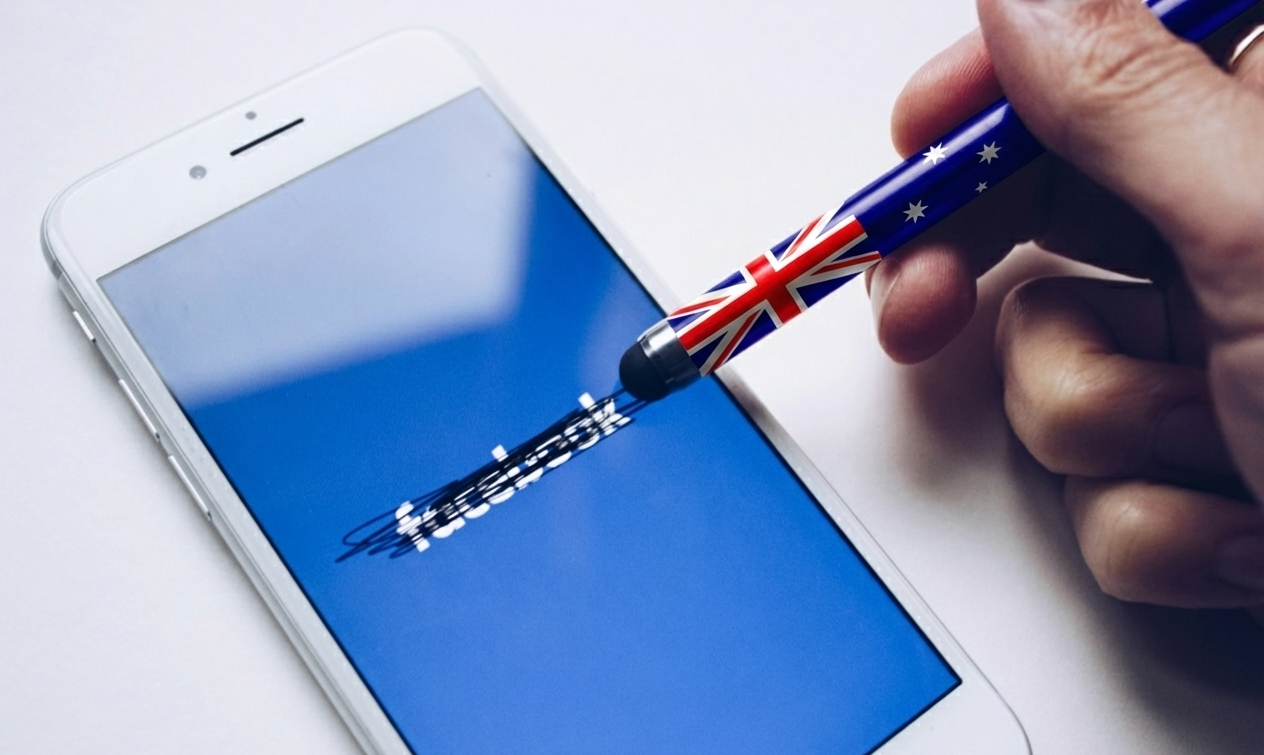

On 10 December 2025, Australia became the first country to enforce a nationwide ban preventing under-16s from holding accounts on major social media platforms, placing regulatory responsibility on technology companies rather than on parents or children.

The legislation faces significant obstacles: High Court challenges from Reddit and a civil liberties group, enforcement difficulties with age verification technology, and concerns that it will push young users toward less regulated platforms.

However, proponents argue that potential mental health benefits and reduced exposure to cyberbullying, harmful content, and addictive algorithms justify the intervention.

Australia's precedent is highly likely to trigger similar legislation globally. Denmark, Malaysia, Norway, and several other nations are actively pursuing comparable restrictions, making the ban a critical test case for digital governance worldwide.

Context

On 10 December 2025, Australia became the first country to enforce a comprehensive social media minimum age requirement for children under 16. The Online Safety Amendment (Social Media Minimum Age) Act 2024, passed on 29 November 2024, obliges platforms including Facebook, Instagram, TikTok, YouTube, Snapchat, Reddit, X, Threads, Twitch, and Kick to take "reasonable steps" to prevent minors from creating or maintaining accounts. Non-compliant companies face fines of up to AUD 49.5m (USD 33m). The legislation also prohibits parents from granting consent to circumvent the restrictions, although neither children nor families will face penalties for violations. The legislation emerged from growing concerns over cyberbullying, online predators, and deteriorating youth mental health linked to social media use. Public polling shows broad but cautious support.

Platforms are required to employ age verification methods, including facial age estimation via selfies, inference from online activity, or uploaded identification documents. The eSafety Commissioner, Julie Inman Grant, issued compulsory information notices to 10 platforms on 11 December 2025, demanding data on deactivated accounts. According to a government-commissioned study, 96% of Australian children aged 10-15 use social media, and over 1m accounts are expected to be affected. An independent review of the legislation's operation is mandated within 2 years. Internationally, Denmark has secured cross-party agreement for restrictions on under-15s that could become law by mid-2026, while Malaysia plans implementation in early 2026.

Legal Challenges and Constitutional Questions

The ban faces 2 High Court challenges. On 12 December 2025, Reddit filed suit, arguing that the law violates Australia's implied constitutional freedom of political communication. Reddit contends that under-16s will become voters "within years if not months" and that restricting their access to online discussion spaces hinders political engagement. The platform also argues it should not be classified as an "age-restricted social media platform" given its forum-based structure.

An earlier, separate challenge, filed on 26 November 2025 by the Digital Freedom Project on behalf of 2 teenagers, raises similar constitutional arguments, with preliminary hearings scheduled for late February 2026. In a public statement, Australian Health Minister Mark Butler compared Reddit's challenge to tactics historically employed by tobacco companies, framing it as profit protection rather than rights advocacy.

Implications and Analysis

The ban establishes a significant precedent for state intervention in digital spaces, fundamentally shifting responsibility from parents to corporations for managing children's online access. This regulatory approach mirrors historical interventions such as seatbelt mandates and tobacco warnings, framing social media as a public health concern requiring government action rather than individual management. However, unlike physical products with tangible age-gating mechanisms, digital services present unique enforcement complexities that will test the viability of this regulatory model.

Potential Mental Health and Developmental Benefits

The legislation responds to mounting evidence linking adolescent social media use to adverse mental health outcomes. Research indicates that children with smartphones at age 12 show higher rates of depression, obesity, and insufficient sleep than peers without early device access. By restricting platform access during critical developmental years, the ban is highly likely to reduce exposure to several documented harms, including cyberbullying, unwanted online sexual solicitation, algorithmically amplified content, and addictive design features.

Additionally, curbing compulsive platform engagement is likely to free up time for developmentally beneficial activities, including face-to-face peer interaction, physical exercise, reading, and skills development. Sleep quality, consistently compromised by screen use and notification-driven interruptions, may also improve with reduced platform access. Parents report feeling disempowered against algorithm-driven engagement, so the legislation provides regulatory backing for household limits that previously required constant parental enforcement against sophisticated persuasive design.

The restriction also represents a significant assertion of governmental authority over technology companies that have largely self-regulated youth access. Australia's willingness to impose substantial penalties signals that governments can meaningfully constrain platform behaviour, potentially catalysing broader regulatory action globally. This challenges the prevailing assumption that technology companies are effectively ungovernable due to their scale, technical complexity, and transnational operations.

Enforcement Architecture and Circumvention Dynamics

The legislation's enforcement framework contains structural vulnerabilities that undermine its stated objectives. By delegating verification methodology to the platforms themselves, the government has created inconsistent standards across services. For instance, Meta's mass account deactivation approach differs fundamentally from YouTube's read-only model, producing an uneven regulatory landscape in which identical users face different treatment depending on the platform they use. This matters because platforms such as YouTube and Reddit also serve as large repositories of educational content, which suggests they should not be treated identically to other platforms under the restrictions.

Early implementation has also exposed substantial enforcement gaps. Reports from the first days indicate that teenagers retained access through previously falsified birthdates, VPNs, or AI-generated facial images used to bypass verification systems. These shortcomings highlight the difficulty of building a robust system for age-based access restriction.

Early evidence also suggests that the ban produces displacement rather than digital abstinence. Downloads of the alternative applications Yope and Lemon8 surged by 251% and 88%, respectively, within days of implementation. This migration carries significant safety implications, as smaller platforms typically lack the resources for robust content moderation, safety reporting mechanisms, or cooperation with law enforcement. The ban is therefore likely to funnel vulnerable users, inadvertently, toward precisely the unmoderated environments where exploitation thrives.

Privacy Paradox and Data Security Implications

The age verification requirement also creates a fundamental paradox: protecting children requires collecting substantially more personal data from all users. Facial estimation, government ID uploads, and behavioural inference require platforms to expand their profiling capabilities considerably. This effectively mandates the construction of surveillance infrastructure that, once established, can be repurposed for commercial exploitation, state surveillance, or malicious access.

Furthermore, migration to encrypted messaging applications and private group chats removes youth activity from regulatory visibility altogether. Parents who previously monitored their children's public social media presence lose insight into interactions conducted through less transparent channels. The ban can therefore reduce parental oversight whilst providing minimal compensating protection, an outcome directly contrary to legislative intent.

The elimination of parental consent provisions also removes family discretion in contexts where supervised access might benefit development. A 15-year-old managing a small business, pursuing journalism, or engaging in political advocacy loses professional tools without individualised assessment. The legislation assumes uniform vulnerability across diverse circumstances, applying identical restrictions to a 10-year-old and a 15-year-old despite substantially different developmental stages and demonstrated digital competencies. More importantly, the ban replaces gradual exposure with abrupt, unrestricted access at 16, risking overwhelming teenagers rather than allowing them to learn to adapt progressively.

Global Regulatory Precedent and Geopolitical Dimensions

Australia's action has catalysed substantial international movement. Denmark secured cross-party agreement in November 2025 for restrictions on under-15s, potentially becoming law by mid-2026. Malaysia plans implementation in early 2026, while Norway, France, Spain, Greece, Romania, and New Zealand are advancing similar proposals. This positions Australia as a regulatory laboratory whose outcomes will shape global digital governance frameworks.

The proliferation of divergent national standards creates compliance complexity for multinational platforms. Companies face fragmented obligations across jurisdictions with varying age thresholds, verification requirements, and penalty structures. There is a realistic possibility that this drives platforms toward either lowest-common-denominator global implementation or withdrawal from the most demanding markets, with significant implications for digital access equity between nations.

Commercial and Innovation Consequences

The ban disrupts established platform business models reliant on early user acquisition. Social media companies invest substantially in capturing users during adolescence, when brand loyalties and usage patterns form. Delaying access until age 16 compresses the customer acquisition window and is likely to alter market dynamics permanently. More broadly, digital skills development that occurs through platform engagement shifts to later ages, with uncertain implications for workforce readiness.

Forecast

Short-term (Now - 3 months)

High Court proceedings will highly likely dominate the policy landscape, with Reddit's constitutional challenge testing the legislation's legal foundations. Enforcement inconsistencies will almost certainly generate political pressure for regulatory clarification, while alternative platforms will likely face classification reviews.

Medium-term (3-12 months)

Denmark and Malaysia are highly likely to implement their announced restrictions, creating an expanding bloc of nations with youth social media bans. The mandated independent review will likely expose implementation gaps, prompting legislative amendments.

Long-term (>1 year)

Australia's experience will highly likely determine the trajectory of global youth digital regulation. If constitutional challenges fail and enforcement demonstrates measurable harm reduction, widespread international adoption is likely by 2030. Demonstrated ineffectiveness could alternatively redirect policy toward platform design mandates and digital literacy investment.